Built for scale

Optimus is an easy-to-use, reliable, and performant workflow orchestrator for data transformation, data modeling, pipelines, and data quality management. It enables data analysts and engineers to transform their data by writing simple SQL queries and YAML configuration, while Optimus handles dependency management, scheduling, and all other aspects of running transformation jobs at scale.

Zero dependency

Warehouse management

Extensible

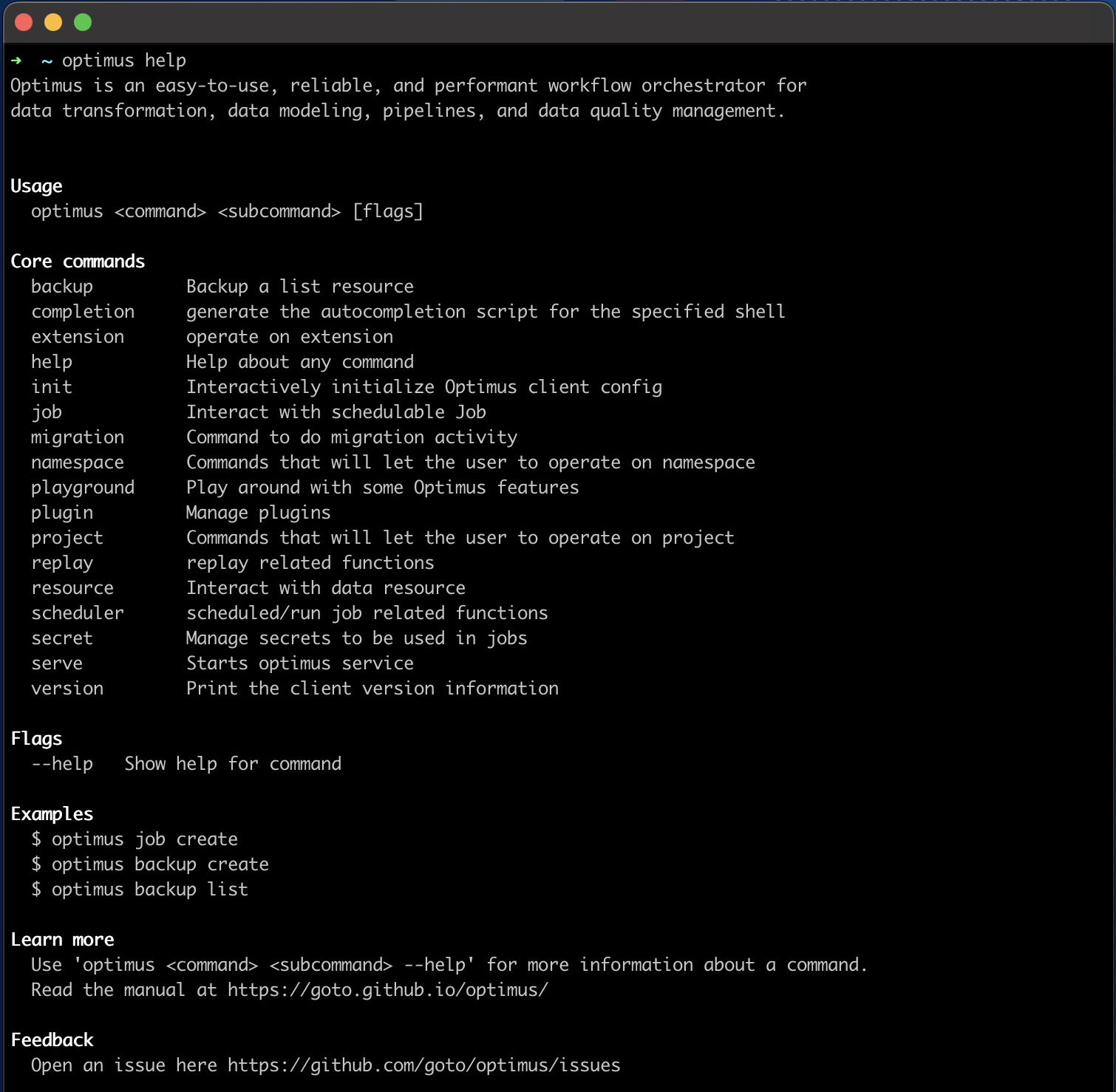

CLI

Proven

Workflows

Key features

Optimus is an ETL orchestration tool that helps manage warehouse resources and schedule transformations on a cron interval. In warehouses like BigQuery, it can create, read, update, and delete different types of resources (datasets, tables, and standard views). Similarly, jobs can be SQL transformations that take inputs from one or more source tables and run on a fixed schedule interval. Optimus was designed from the start to be extensible, so support for different kinds of warehouses and transformers can be added easily.
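Because a job can read from several source tables, any job that produces one of those tables has to run first. The sketch below illustrates that idea under stated assumptions: a hypothetical `Job` record lists a job's destination table and the source tables its SQL reads, and a hypothetical `resolve_order` function derives job-to-job dependencies from those tables and orders them with a topological sort. It is not Optimus's actual data model or API, only a minimal picture of how table-level inputs can translate into a run order.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass(frozen=True)
class Job:
    """Hypothetical job model: one SQL transformation writing one destination table."""
    name: str
    destination: str
    sources: tuple[str, ...]


def resolve_order(jobs: list[Job]) -> list[str]:
    """Return job names ordered so every job runs after the jobs it depends on."""
    # Map each destination table to the (hypothetical) job that produces it.
    producers = {job.destination: job.name for job in jobs}
    graph: dict[str, set[str]] = {}
    for job in jobs:
        # A job depends on every job that produces one of its source tables;
        # sources with no producer (e.g. raw input tables) add no edge.
        graph[job.name] = {producers[s] for s in job.sources if s in producers}
    # static_order() yields each node only after all of its predecessors.
    return list(TopologicalSorter(graph).static_order())


# Usage with made-up table names: the report reads two staging tables.
jobs = [
    Job("daily_report", "mart.daily_report", ("staging.orders", "staging.users")),
    Job("orders", "staging.orders", ("raw.orders",)),
    Job("users", "staging.users", ("raw.users",)),
]
print(resolve_order(jobs))
```

The relative order of independent jobs (here `orders` and `users`) is not fixed; only the constraint that both come before `daily_report` is guaranteed.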

Scheduling

Dependency resolution

Dry runs

Powerful templating

Cross tenant dependency

Hooks

Workflow

With Optimus, data teams work directly with the data warehouse and data catalogs. Optimus provides a set of workflows that can be used to build data transformation pipelines and reporting, operational, and machine learning workflows.

Develop

Test

Deploy